kreuzwerker has developed a methodology to assess the cloud native maturity of businesses with substantial operations in the AWS Cloud. This methodology identifies gaps in cloud nativeness and determines whether the right preconditions for full cloud native adoption are in place. The result is a cloud native score, broken down into categories and measured against business values. It is accompanied by an extensive report that includes recommendations, prioritized actions, and KPIs.

This methodology evaluates the cloud native maturity of existing cloud operations, including the cultural components and processes that are essential for becoming cloud native. The goal is to quantify the current maturity in a cumulative score. This score serves as a baseline for future assessments that track progress, or for comparison against other companies. The scoring system is described in more detail below.

Why Cloud Native?

The main idea behind being cloud native is to use the benefits of the cloud optimally and align them with business values and processes. This alignment translates into tangible solutions and improvements. Being cloud native enables a company to be more agile, faster, more flexible, and more innovative. It leads to shorter time-to-market, data-driven decision-making, and lean, self-organizing processes.

Our methodology therefore offers a comprehensive view of cloud native maturity, focusing not only on technology but also on people, culture, product management, teams, and processes. This includes aspects such as decision-making, experimentation, collaboration, education, and knowledge sharing. But before we proceed, let’s first define what ‘cloud native’ actually means. While there are many definitions available, we have distilled it to the following core concept:

Focusing on self-enabling teams through continuous automated delivery and testing with reduced operational overhead

Definition

The above is a simple definition, yet it carries many implications. Like a snowball rolling downhill, this seemingly straightforward statement gathers one implicit consequence after another. The extent to which these consequences manifest within an organization defines its cloud native maturity. Below, I present a condensed overview of these consequences. But be warned: this explanation is not for the faint of heart.

The Snowball Effect

The brief statement “Focusing on self-enabling teams through continuous automated delivery and testing with reduced operational overhead” has far-reaching implicit consequences. First, continuous delivery by multiple teams suggests that codependent workloads can be developed and deployed independently. This in turn requires continuous testing to ensure fault-tolerant delivery of those codependent workloads, so that teams can fully own the process of building and running their workloads in production environments. That ownership is only sustainable if operational overhead is reduced as far as possible through automated processes. Continuous delivery also implies that changes are rolled out in small batches, which streamlines automated delivery and testing. Testing therefore becomes foundational to development, because code cannot be accepted without it. The emphasis on small batches further suggests that larger changes should be de-risked by first prototyping them and gathering real-world metrics before full implementation. The practice of prototyping and comparing different options and outcomes points to a culture of innovation, which in turn requires support for that culture at the organizational level.

Fully automated pipelines also imply that, in cloud-native development, infrastructure dependencies are covered by or included in the development environment. Propagation to other environments, including production, must likewise take these infrastructure dependencies into account. Ideally, infrastructure updates can be triggered from the application pipelines, which requires organizing infrastructure code into clear verticals. To reduce overhead, services that need little or no additional provisioning or configuration are preferred, so managed services and serverless architectures should prevail. Finally, this suggests that dedicated infrastructure pipelines automate the rollout of infrastructure components, and that tests verify these rollouts work as expected.

Business Relevance

We could expand on this topic at length, but this brief walk-through already shows that our streamlined definition implicitly encompasses the entire spectrum of cloud nativeness. Note, however, that like any all-encompassing definition, it describes an idealized, utopian situation. Real-world scenarios invariably involve trade-offs, dictated by practical limitations such as time, financial resources, and human capital.

This is why the methodology not only identifies gaps but also allows a partial focus on the most business-relevant topics. By mapping the scores to business values and prioritizing those values, it becomes easier to target specific areas for attention. Certain topics can thus be parked or even redefined according to specific business needs. Take, for instance, the cloud-native paradigm of using microservices. Not every company or solution benefits from a microservice architecture, especially when the effort of adopting it outweighs the expected payoff. In such cases, it makes little sense to place much emphasis on this architectural decision, because it does not serve the business values that matter.

Methodology

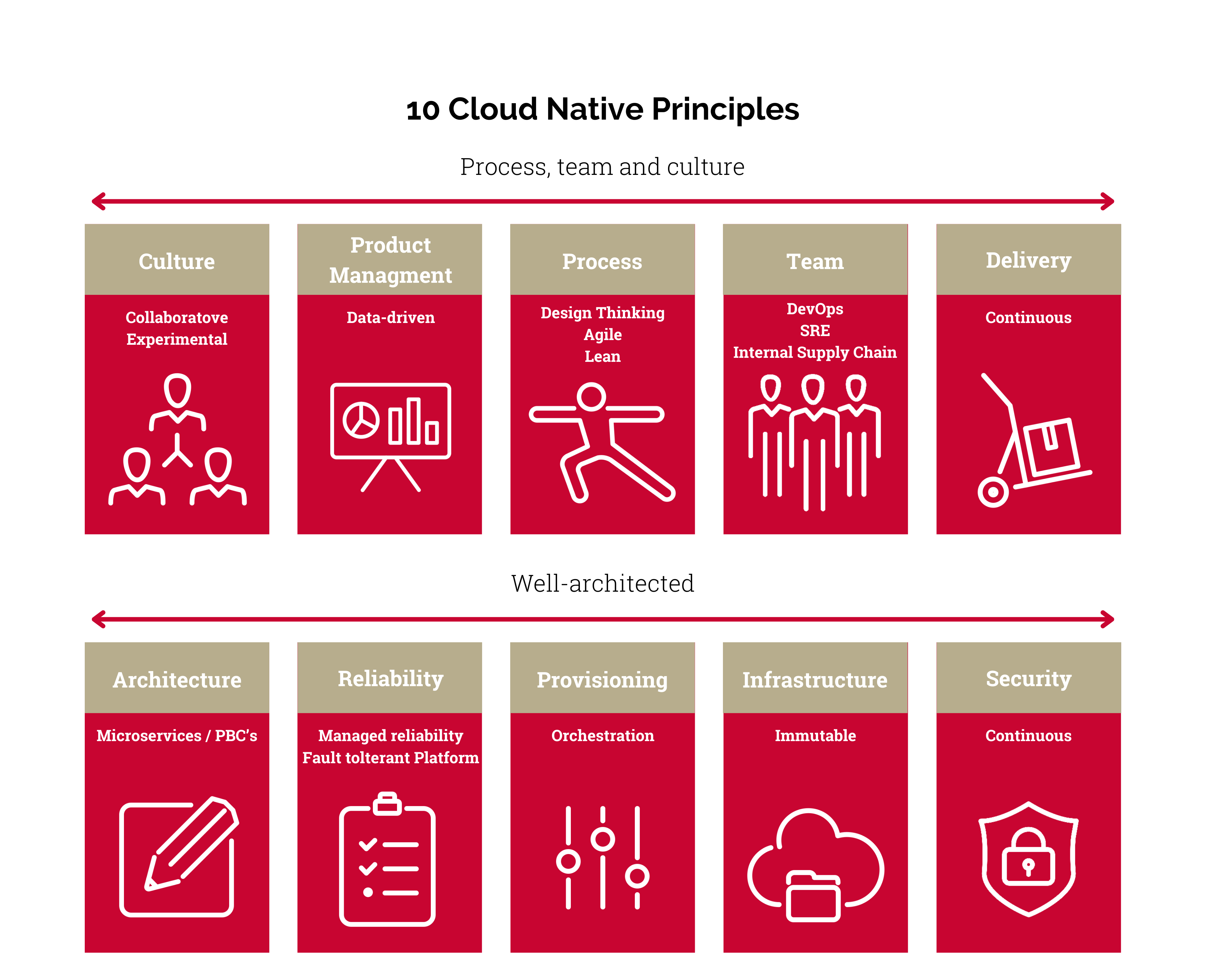

We have defined ten design principles, also referred to as pillars, that contribute equally to the cloud native maturity of an organization. As mentioned earlier, our challenge was to encompass not only technology topics but also operations, processes, and less tangible aspects such as culture and collaboration. The latter are vital preconditions for establishing cloud native maturity. For an overview of the ten pillars, refer to figure 1.

Figure 1

Another challenge was to quantify the results in an overall index score. A crucial aspect of this maturity assessment is enabling organizations to establish a baseline for comparison. By recalculating the index at regular intervals, organizations can take snapshots that track measurable progress.

To achieve this, each design principle was divided into paradigms, and each paradigm further into a set of best practices. The assessment is conducted through a series of interviews with selected stakeholders from all organizational ranks, primarily those in roles with active, substantial expert knowledge.

Scoring works by presenting the best practices as propositions. Participants rate, on a scale, the extent to which each proposition applies to their situation. Participants should be selected carefully beforehand so that every topic is covered by people with sufficient exposure to it, which prevents uninformed responses from contaminating the score.
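The article does not prescribe how individual ratings become a best practice score. As a minimal sketch, assume a 1 to 5 rating scale, let participants without exposure to a topic abstain (recorded as `None`) rather than guess, and average the informed ratings, normalized to 0 to 100. The scale, the abstention rule, and the function name `best_practice_score` are all illustrative assumptions.

```python
from statistics import mean
from typing import Optional

# Assumption: a 1-to-5 rating scale; the article does not specify one.
SCALE_MIN, SCALE_MAX = 1, 5


def best_practice_score(ratings: list[Optional[int]]) -> Optional[float]:
    """Combine participants' ratings of one proposition into a 0-100 score.

    None marks an abstention by a participant without exposure to the topic;
    abstentions are skipped rather than counted as low ratings.
    Returns None if no informed rating was given.
    """
    informed = [r for r in ratings if r is not None]
    for r in informed:
        if not SCALE_MIN <= r <= SCALE_MAX:
            raise ValueError(f"rating {r} is outside {SCALE_MIN}-{SCALE_MAX}")
    if not informed:
        return None
    # Normalize the mean rating so that SCALE_MIN maps to 0 and SCALE_MAX to 100.
    return 100 * (mean(informed) - SCALE_MIN) / (SCALE_MAX - SCALE_MIN)


print(best_practice_score([4, 5, None, 3]))  # 75.0
```

Skipping abstentions, instead of treating them as the lowest rating, is one way to keep uninformed responses out of the score.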

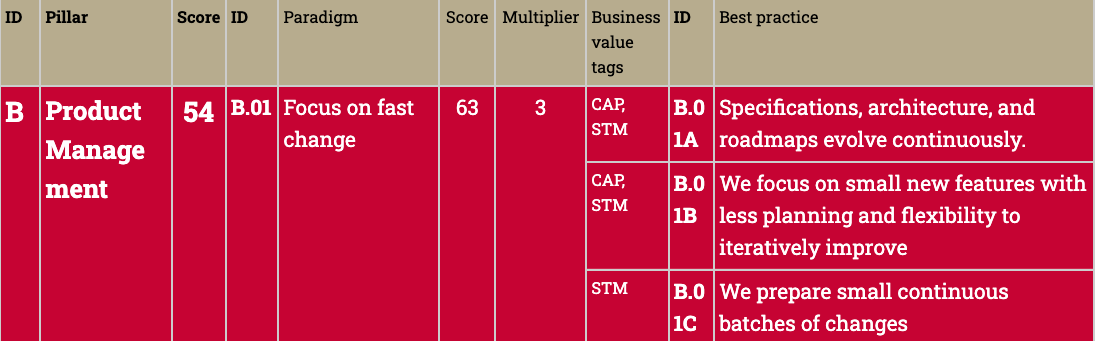

The best practice scores are then aggregated into paradigm scores, the paradigm scores into pillar scores, and the pillar scores into the overall index score.
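A minimal sketch of this roll-up, assuming each level is an unweighted mean of the level below (the article only says the scores are cumulated). Because the ten pillars contribute equally, the index here is the plain mean of the pillar scores. The nested-dictionary layout, the function `roll_up`, and the pillar and practice names are hypothetical.

```python
from statistics import mean

# Hypothetical layout: pillar -> paradigm -> best practice -> score (0-100).
Assessment = dict[str, dict[str, dict[str, float]]]


def roll_up(assessment: Assessment):
    """Aggregate best practice scores into paradigm, pillar and index scores."""
    paradigm_scores = {
        (pillar, paradigm): mean(practices.values())
        for pillar, paradigms in assessment.items()
        for paradigm, practices in paradigms.items()
    }
    pillar_scores = {
        pillar: mean(
            score for (owner, _), score in paradigm_scores.items() if owner == pillar
        )
        for pillar in assessment
    }
    # All pillars contribute equally, so the index is the plain mean of pillar scores.
    index_score = mean(pillar_scores.values())
    return paradigm_scores, pillar_scores, index_score


# Illustrative input; these are not the methodology's actual pillars.
example = {
    "Automation": {
        "Continuous delivery": {"Small batches": 80.0, "Automated tests": 60.0},
        "Infrastructure as code": {"Infrastructure pipelines": 40.0},
    },
    "Culture": {
        "Experimentation": {"Prototyping": 50.0},
    },
}
paradigms, pillars, index = roll_up(example)
print(pillars)  # {'Automation': 55.0, 'Culture': 50.0}
print(index)    # 52.5
```

Averaging rather than summing is a deliberate choice in this sketch: a plain sum would let pillars with more best practices dominate the index, which would conflict with the principle that all pillars contribute equally.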

Extra weight can also be assigned to individual best practices and paradigms. This is done by applying a multiplier that gives certain best practice scores more weight than others within the same paradigm, or certain paradigms more weight than others within the same pillar. The process starts by defining business values, which are attached as tags to best practices and paradigms. The tagging system then automatically applies multipliers based on the priority of these business values. Because business values cut across pillars, they also offer an alternative view of the index: insights are no longer limited to individual pillars but can span multiple pillars.

See an example in figure 2.

Figure 2

Business values should be derived from the organization’s mission and can be tailored to specific needs. Business values used in past assessments include Innovation and Experiment, Speed To Market (STM), Collaboration and Productivity (CAP), Competitive Advantage, High Availability and Reliability, Global Reach, Security and Compliance, Reduced Time To Recovery, Reduced Operational Overhead, and Resource Optimization.
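The business value weighting described above can be sketched in the same spirit. This version assumes that each prioritized business value maps to a multiplier, that a best practice tagged with several values takes the highest multiplier (untagged practices weigh 1.0), and that the cross-pillar view is simply the mean score of all practices carrying a given tag. The priorities, the `BestPractice` type, and the function names are hypothetical illustrations, not the methodology's actual tagging system.

```python
from dataclasses import dataclass, field


@dataclass
class BestPractice:
    name: str
    pillar: str
    score: float  # assumed 0-100
    tags: set[str] = field(default_factory=set)  # business values


# Hypothetical priorities: the multiplier applied to practices tagged with a value.
PRIORITIES = {"Speed To Market": 2.0, "Security and Compliance": 1.5}


def multiplier(bp: BestPractice) -> float:
    # Assumption: the highest-priority tag wins; untagged practices, and tags
    # without a priority, weigh 1.0.
    return max((PRIORITIES.get(tag, 1.0) for tag in bp.tags), default=1.0)


def weighted_score(practices: list[BestPractice]) -> float:
    """Weighted mean of best practice scores, e.g. within one paradigm."""
    weights = [multiplier(bp) for bp in practices]
    return sum(w * bp.score for w, bp in zip(weights, practices)) / sum(weights)


def business_value_view(practices: list[BestPractice]) -> dict[str, float]:
    """Mean score per business value, regardless of which pillar a practice is in."""
    by_value: dict[str, list[float]] = {}
    for bp in practices:
        for tag in bp.tags:
            by_value.setdefault(tag, []).append(bp.score)
    return {value: sum(scores) / len(scores) for value, scores in by_value.items()}


practices = [
    BestPractice("Small batches", "Automation", 70.0, {"Speed To Market"}),
    BestPractice("Automated tests", "Automation", 40.0),
    BestPractice("Least privilege access", "Security", 60.0,
                 {"Security and Compliance", "Speed To Market"}),
]
print(weighted_score(practices[:2]))  # 60.0 (the unweighted mean would be 55.0)
print(business_value_view(practices))
```

In this example, weighting lifts the two automation practices from an unweighted mean of 55.0 to 60.0, because the practice tagged with the prioritized Speed To Market value counts double. The business value view groups practices from different pillars under the same value.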

Conclusion

The kreuzwerker methodology for assessing cloud native maturity takes a holistic approach that encompasses technology, operations, culture, and more. With ten design pillars, each detailed through paradigms and best practices, it provides a structured yet flexible framework for evaluation. The scoring system, adjustable through weights based on business values, not only establishes a baseline but also tracks progress over time. Acknowledging the aspirational nature of cloud nativeness, our methodology realistically addresses the trade-offs inherent in cloud-native adoption. It is a dynamic tool that guides organizations in understanding and enhancing their cloud capabilities, in line with both their current and future business and technology needs.

For a full demonstration or additional information, please contact us.